Abstract

Since its discovery almost 70 years ago, the hologram has been considered to reproduce the most realistic three dimensional images without visual side effects. Holographic video has been extensively researched for commercialization, since Benton et al. at MIT Media Lab developed the first holographic video systems in 1990. However, commercially available holographic video displays have not been introduced yet for several reasons: narrow viewing angle, bulky optics and heavy computing power. Here we present an interactive slim-panel holographic video display using a steering-backlight unit and a holographic video processor to solve the above issues. The steering-backlight unit enables to expand the viewing angle by 30 times and its diffractive waveguide architecture makes a slim display form-factor. The holographic video processor computes high quality holograms in real-time on a single-chip. We suggest that the slim-panel holographic display can provide realistic three-dimensional video in office and household environments.

Similar content being viewed by others

Introduction

A holographic display uses light diffraction to create three-dimensional (3D) images in space1. When real objects and holographic images are located in the same space, they can be perceived without inhomogeneity. Figure 1 shows how the holographic image can be shown with a real human hand. Both the fairy image and the hand are 0.3 m away from the screen. With a holographic display, one can see the fairy image and the hand clearly focused without having any uncomfortable feeling. On the contrary, conventional stereoscopic 3D images using only binocular parallax and vergence are recognised as images at the screen2,3. One cannot clearly see the fairy image and the real hand at the same time, and will experience visual fatigue caused by an accommodation–vergence conflict2. Therefore, holographic displays are essential parts of future video systems including interactive 3D user interfaces.

The holographic image and the human hand are at the same distance from the camera. It provides natural depth perception and prompts a viewer to focus on the object itself, not on the screen. The bulk-optic backlight unit is used to demonstrate the ultimate quality of the holographic image. The movie clip is available in Supplementary Movie 1.

Because of its advantage in 3D image reproduction, the technology for static holograms is quickly developed to a high standard by using hologram recording materials such as silver halide and photopolymer4. Recently, nanophotonics5 and metasurfaces6 are also used to reconstruct static holograms. However, those holographic media are non-updatable or have a limited updating frequency7, causing a fundamental limitation for generating dynamic holograms. By using a spatial light modulator (SLM), which directly modulates the wavefront of light, it is possible to update holograms at video rate. Many researches have demonstrated holographic video systems so far, including anisotropic leaky mode8 and eye tracking9. The recent study on a holographic display was reported, including a full-colour 3D computer-generated hologram (CGH) calculated in real-time, and a focus-adjustable reconstructed image10. However, it has been demonstrated that vertical parallax only holograms using a bulky optical system. It is expected to require quite an amount of time to reach the mobile holographic video for practical applications.

To build a mobile holographic video display, the following barriers need to be overcome. First of all, there is the limitation of the space-bandwidth product (SBP), which determines both the size of holographic image and the viewing angle. The static holographic media can produce large holographic images with a large viewing angle, because the information of hologram is recorded in the sub-wavelength density and it can be recorded on the large size film. However, SBP is limited by the pixel size and the number of pixels when an SLM is used as a dynamic holographic medium. The SBP of the currently available SLM is generally a few hundred times less than the SBP of the static holographic media. It means that only a small size or a narrow viewing angle dynamic hologram can be realised. Second, to generate a large coherent backlight, complicated optical components and a considerable space is required for the manipulation of light. It is difficult to realise a holographic video display as slim as flat-panel displays commercialised nowadays. Last, the calculation of hologram in real-time typically requires huge computational cost, and the amount of computation increases as the SBP increases. Several studies have been carried out to optimise algorithms and to increase computation speed, but they still require clustered processors or high-performance parallel processing systems to calculate high-quality hologram at video frame rate11,12,13,14,15,16.

In this research, a real-time interactive slim-panel holographic video display is demonstrated for the first time. It resolves all the above issues of low SBP, bulky optical system, and enormous computational cost. To increase effective SBP (Supplementary Note 1), a steering-backlight unit (S-BLU), which consists of a coherent-BLU (C-BLU) and a beam deflector (BD) is introduced. The effective SBP is increased by 30 times compared to the original value by using the S-BLU. It is the highest SBP ever achieved for a real-time holographic video system using an SLM. All the optical components are designed and fabricated as a slim structure. To generate 4-K (3840 × 2160) ultra-high-definition (UHD) pixel holograms in real-time, a holographic video processor is implemented on a single-chip field programmable gate array (FPGA).

Results

Beyond SBP

In holographic display, SBP can be represented by the equation, W × θ = λ × N, where W is the image size, θ is the viewing angle, λ is the wavelength, and N is the number of pixels. For a given number of controllable pixels, the maximum size of holographic image and the viewing angle are always trade-off relation. For example, an SLM with full high-definition (FHD) resolution can provide the viewing angle of 0.25° in 10-in. display or 30° in 0.1-in. display. For satisfying both large size and large viewing angle, the SBP should be expanded by increasing the number of pixels N. There are studies to increase the number of pixels N by tiling many SLMs17 or by increasing the resolution of a single SLM18. To achieve the 30° viewing angle in 10-in., 221-K horizontal resolution is required, which is ~100 times higher resolution than the FHD resolution. Apart from the difficulty in fabricating such an SLM, the computation of the CGH in real-time may not be feasible, because the total number of pixels increases ~13,000 times.

In this research, we expand the effective SBP without increasing the number of pixels of the SLM. To overcome the small viewing angle of a large size SLM, we introduce S-BLU, which is able to tilt the angle of the backlight for reconstructing holograms as shown in Fig. 2. Since the S-BLU steers the backlight and can deliver the holographic image to a desired direction, the viewing angle can be effectively expanded for an observer by the amount of maximum steering angle of S-BLU.

The BD, one of the key elements of S-BLU, plays the role of steering a beam. The BD consists of transmission phase arrays, which can provide the linear phase profile for tilting the transmission angle. By using the BD, an extra enhancement factor is added to the original SBP equation W × θ = λ × N(1 + pSLM/pBD). The enhancement factor proportionally increases with the ratio of pixel pitches between SLM and BD. In our holographic video system, the pixel pitch of the BD, pBD, is designed as 2 µm while the pixel pitch of the SLM, pSLM is 58 µm. Then, the effective SBP is increased ~30 times without increasing the number of pixels in the SLM. Since the pBD can be reduced further independent to the SLM, using a BD is more scalable mean to expand SBP than directly increasing the number of pixels of the SLM.

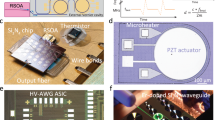

S-BLU architecture and holographic video processor

The S-BLU is the key component for expanding the viewing angle of holographic display. In Fig. 3a, the S-BLU consists of the BD (Supplementary Note 2) and the C-BLU (Supplementary Note 3). The BD steers light19 and the C-BLU20 forms the uniform wavefront incident from the BD as shown in Fig. 3b, c. Three sets of BDs have been used for each R/G/B colour. Each BD set consists of two pairs of linear phase arrays based on liquid crystals for both eyes. Each pair can steer light along the horizontal and the vertical directions.

a Optical architecture consists of beam deflectors, coherent-backlight units, a geometric phase lens and a spatial light modulator. b Principle of the beam deflector, which steers the transmitted lights optically like a prism: the vertical and horizontal phase arrays steer light up to ~15° with the angular resolution of 0.02° at the wavelength of 520 nm. c Configuration of the coherent-backlight unit using waveguide: the first waveguide for both red and green light and the second waveguide for blue light are stacked together to increase the overall efficiency. d The holographic video processor is implemented on a single-chip FPGA.

In a typical beam steering, the maximum steering angle is reduced as the area of the light source increases due to the etendue. In our holographic video system, the etendue issue has been solved by using the waveguide structure of the C-BLU. Once the beam size of 14 mm × 140 mm is steered as wanted by the BDs, it is enlarged to 140 mm × 230 mm by duplicating inside of the waveguide without reduction of the maximum steered angle.

One disadvantage of the small viewing angle is a long viewing distance where the diffracted lights from all pixels start to overlap. Thus, a lens is necessary to reduce the viewing distance by gathering those diffracted lights together. The minimum viewing distance is, D = 0.5 × L/tan(θ/2), where L is the horizontal length of the display and θ is the viewing angle. The minimum viewing distance is ~25 m when L is 22 cm (equivalent to 10-in.) and θ is 0.25°. By using a lens of 1 m focal length, the viewing distance is reduced by 25 times. However, a conventional bulk lens is not appropriate for the slim-panel holographic video display in terms of its thickness and weight. The geometric phase lens (GPLs), which exploits the spatial anisotropy21, is implemented as a field lens. The diffraction efficiency of a GPL can reach 98% over the entire visible wavelength. The overall thickness of optical components including the GPL is 1 cm.

For the real-time interactive holographic video display, the 3D image should be updated for the position of the viewer’s eyes which is detected by the eye-tracking sensor (Supplementary Note 4). An application software, such as a graphic rendering software, or a 3D camera generate 3D images that should be projected on the viewer’s retina plane. The 3D image is provided to the holographic video processor in red, green, blue and depth (RGB-D) format. From the image on the retina plane, the optical field distribution on the eye lens plane is computed. Then, from the optical field distributions on the eye lens, the holographic patterns that should be applied to the SLM are computed. For these steps, a layer-based method is used and two-dimensional inverse fast Fourier transforms (2D IFFTs) are heavily used22,23. Layer-based method can generate high-quality holograms for real scenes. However, layer-based method using FFTs has been considered unsuitable for CGH hardware accelerators since FFT processors were difficult to build in large scale15. We built an IFFT-based holographic video processor (Supplementary Note 5) using a highly parallel architecture and several hardware-reducing techniques. Two holographic images are computed at the same time: one for the right eye and the other for the left eye. Later, they are combined into a single hologram22,24.

The holographic video processor consists of three major parts: data propagation unit (DPU), filter and scale unit (FSU), and 32 IFFT processors. The IFFT processors perform 2D IFFT computations required for the calculations of the optical field distribution on the eye lens and the hologram patterns for the SLM. The 32 IFFT processors can compute 32 1-D IFFTs at the same time. The 2D IFFTs are computed with the row–column method. The DPU prepares the data needed for the IFFT computations from various sources including the input RGB-D data. The FSU performs several filtering operations and miscellaneous functions. The FSU also performs pixel encoding, which converts a complex number into an integer, which is provided to the SLM.

The holographic video processor uses fixed-point number representations for all data processing tasks including multiplications in the IFFT. The number of bits used to represent a number is kept at the lowest level. For example, when the Fourier coefficients are stored in the memory, only 32 bits are used to represent a complex number. The holographic video processor is built with a single-chip FPGA. The single-chip FPGA holographic video processor generates binocular holographic colour images with 3840 × 2160 pixels at the speed of 30 frames per second (fps).

As the system bus, we used Advanced Microcontroller Bus Architecture Advanced Extensible Interface 4 (AMBA AXI4), which is widely used in smartphone application processors. This makes it easy to embed the holographic video processor into a smartphone application processor.

Device implementation

The actual system implementation of the slim-panel holographic video display is shown in Fig. 4. Three colour laser diodes are used as coherent light sources. These laser lights are summed as they pass through BDs and C-BLUs, and the resulting coherent white light is led through a GPL with a focal length of 1 m onto the SLM. A 10.1-in. UHD commercial liquid crystal display (LCD) is used as the amplitude-only SLM.

The prototype includes optical components (beam deflectors, coherent-backlight units, and a geometric phase lens), a holographic video processor board, a spatial light modulator, power connectors, and other miscellaneous components. The thickness of the display parts is 1 cm, including coherent-backlight units, a geometric phase lens, and a spatial light modulator.

Two experiments are conducted to validate the dynamic colour holographic display. First, the accommodation effect is checked by placing voxels in the air with different depths. Second, a full-screen colour holographic video is played. Each scene consists of 8 million voxels and it is refreshed at 30 fps. The CGH is calculated by the single-chip holographic video processor from a 3D scenery source rendered in the Unity platform.

As shown in Fig. 5a, the voxels are placed along Z-axis with different X–Y positions, so that overall shape forms the letter S, when they are seen at Z = 1 m position. By changing the focal plane of the camera at the distance of 0 and 0.3 m, different images are captured as shown in Fig. 5c, d. Figure 5b shows the diameter of voxels along with various focal planes of camera. The distance of the minimum diameter of each voxel corresponds to the depth of its desired position. This proves that the holographic display provides the correct accommodation effect.

a The coloured voxels are placed along Z-axis with various X–Y positions where whole voxels can be seen as letter ‘S’. b Measured diameters of each voxels are plotted for different focal planes. c, d The holographic images are taken at the two focal planes of Z = 0 and 0.3 m, respectively. The diameter of blue voxel is the minimum at Z = 0, while the diameter of red voxel is the minimum at Z = 0.3 m, which provides the correct accommodation effect.

As the depth of voxel increases, a minimum diameter of voxel slightly increases due to the finite spatial coherence of the backlight. The contrast of the letter S is ~200. Unlike the other SBP improving method25, the contrast ratio does not change regardless of the number of voxels in our case.

Figure 6 shows still shots taken from the dynamic holographic video display in Supplementary Movie 2. The scene is about a turtle swimming under the sea. The corals are placed 0.1 m behind the display and the hill is far behind the display panel, while the turtle and other fish move all around the space. Since the depths of all objects are different, the sharpness of holographic objects changes as the camera focus changes. The top-right inset in Fig. 6a shows that the coral is clearer than the turtle, while the inset in Fig. 6b shows that the face of the turtle becomes clear when the camera focuses it. It demonstrates the unique feature of hologram providing the correct accommodation effect as real objects do, which has not been realised by conventional 3D displays. The turtle is manually controlled along any direction by using a keypad. It proves the dynamic holographic image is updated in real-time using the holographic video processor.

Photos are taken from a holographic video display when it is focused. a 0.1 m behind the display and b 0.35 m in front of the display: for the comparison between the images, expanded images are shown in the upper right corners respectively. The movie clip is available in Supplementary Movie 2, where interactive holographic colour images are shown at 30 fps.

Discussion

In this paper, a novel slim-panel holographic video display is proposed mainly on a new S-BLU architecture and a single-chip real-time holographic video processor. The S-BLU expands the effective SBP by 30 times, while the holographic video processor calculates high-quality CGH at 30 fps. The overall system thickness is <10 cm. The thickness of the display parts is 1 cm. Based on the combination of these, we demonstrated the world’s first slim full-colour holographic video display using 10.1-in. conventional UHD LCD. Research is in progress to scale down the volume of the system suitable for mobile phones. The holographic video processor is also designed to be embedded in a mobile application processor. The proposed system will accelerate the adoption of the holographic video for mobile devices.

Data availability

All relevant data that support the findings of this work are available from the corresponding author upon reasonable request. Source data are provided with this paper.

References

Gabor, D. Holography, 1948–1971 [Nobel Lecture, December 13, 1971]. Sov. Phys. Uspekhi 16, 5–30 (1973).

Kim, J., Kane, D. & Banks, M. S. The rate of change of vergence-accommodation conflict affects visual discomfort. Vis. Res. 105, 159–165 (2014).

Shibata, T., Kim, J., Hoffman, D. M. & Banks, M. S. The zone of comfort: Predicting visual discomfort with stereo displays. J. Vis. 11, 11 (2011).

Colburn, W. S. & Haines, K. A. Volume hologram formation in photopolymer materials. Appl. Opt. 10, 1636–1641 (1971).

Sun, J., Timurdogan, E., Yaacobi, A., Hosseini, E. S. & Watts, M. R. Large-scale nanophotonic phased array. Nature 493, 195–199 (2013).

Ni, X., Kildishev, A. V. & Shalaev, V. M. Metasurface holograms for visible light. Nat. Commun. 4, 2807 (2013).

Blanche, P.-A. et al. Holographic three-dimensional telepresence using large-area photorefractive polymer. Nature 468, 80–83 (2010).

Smalley, D., Smithwick, Q., Bove, V., Barabas, J. & Jolly, S. Anisotropic leaky-mode modulator for holographic video displays. Nature 498, 313–317 (2013).

Häussler, R., Schwerdtner, A. & Leister, N. Large holographic displays as an alternative to stereoscopic displays. Proc. SPIE 6803, 1–9 (2008).

Häussler, R., Gristal, Y., Zschau, E., Missach, R., Sahm, H., Stock, M. & Stolle, H. Large real-time holographic 3D displays:enabling components and results. Appl. Opt. 56, F45–F52 (2017).

Shimobaba, T., Kakue, T. & Ito, T. Review of fast algorithms and hardware implementations on computer holography. IEEE Trans. Ind. Inf. 12, 1611–1622 (2016).

Tsang, P. W. M. & Poon, T. C. Review on the state-of-the-art technologies for acquisition and display of digital holograms. IEEE Trans. Ind. Inf. 12, 886–901 (2016).

Chen, J. S. & Chu, D. Realization of real-time interactive 3D image holographic display [Invited]. Appl. Opt. 55, A127–A134 (2016).

Niwase, H. et al. Real-time electroholography using a multiple-graphics processing unit cluster system with a single spatial light modulator and the InfiniBand network. Opt. Eng. 55, 093108-1-093108-6 (2016).

Sugie, T. et al. High-performance parallel computing for next-generation holographic imaging. Nat. Electron. 1, 254–259 (2018).

Corda, R., Giusto, D., Liotta, A., Song, W. & Perra, C. Recent advances in the processing and rendering algorithms for computer-generated holography. Electronics 8, 556 (2019).

Sasaki, H. et al. Large size three-dimensional video by electronic holography using multiple spatial light modulators. Sci. Rep. 4, 6177 (2014).

Lee, H. S. et al. Large-area ultra-high density 5.36” 10Kx6K 2250 ppi display. SID Dig. 49, 607–609 (2018).

Kim, Y. et al. Electrically tunable transmission-type beam deflector using liquid crystal with high angular resolution. Appl. Opt. 57, 5090–5094 (2018).

Choi, C. et al. Ultra-slim coherent backlight unit for mobile holographic display. Proc. SPIE 9556, 955606 (2015).

Kim, J., Li, Y., Miskiewicz, M. N., Oh, C., Kudenov, M. W. & Escuti, M. J. Fabrication of ideal geometric-phase holograms with arbitrary wavefronts. Optica 2, 958–964 (2015).

Kim, H. et al. A single-chip FPGA holographic video processor. IEEE Trans. Ind. Electron. 66, 2066–2073 (2018).

Im, D. et al. Phase-regularized polygon computer-generated holograms. Opt. Lett. 39, 3642–3645 (2014).

An, J. et al. Binocular holographic display with pupil space division method. SID Symp. Dig. Tech. Pap. 46, 522–525 (2015).

Yu, H., Lee, K., Park, J. & Park, Y. Ultrahigh-definition dynamic 3D holographic display by active control of volume speckle fields. Nat. Photon. 11, 186–192 (2017).

Author information

Authors and Affiliations

Contributions

J.A. designed the overall optical architecture, system integration, and wrote the initial draft of the manuscript. K.W. performed the BD design and wrote and revised the manuscript with input from all other authors. Y.K. manufactured and conducted an experiment on the BD and reviewed the manuscript. J.-Y.H. conducted system integration and reviewed the manuscript. H.K., Yongkyu Kim, and H.P. conducted the holographic video processor. H.S. designed the overall system architecture and conducted system integration. C.C. and Yunhee Kim manufactured and conducted an experiment on the C-BLU. J.S. conducted system integration and eye-tracking system. A.M. contributed to the BD and C-BLU design. S.H. reviewed the manuscript. Sungwoo Hwang advised and revised the manuscript. K.K. performed a key role in the holographic video processor and revised the manuscript. H.-S.L. initiated and supervised the project and revised the manuscript.

Corresponding authors

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Source data

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

An, J., Won, K., Kim, Y. et al. Slim-panel holographic video display. Nat Commun 11, 5568 (2020). https://doi.org/10.1038/s41467-020-19298-4

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41467-020-19298-4

This article is cited by

-

Integral imaging near-eye 3D display using a nanoimprint metalens array

eLight (2024)

-

Color liquid crystal grating based color holographic 3D display system with large viewing angle

Light: Science & Applications (2024)

-

Waveguide holography for 3D augmented reality glasses

Nature Communications (2024)

-

Extended depth of field in augmented reality

Scientific Reports (2023)

-

Deep learning-based incoherent holographic camera enabling acquisition of real-world holograms for holographic streaming system

Nature Communications (2023)

Comments

By submitting a comment you agree to abide by our Terms and Community Guidelines. If you find something abusive or that does not comply with our terms or guidelines please flag it as inappropriate.